Is 3-D gesture control the interface of the future? That will depend upon the ingenuity of the engineers, the efficiency of the various systems and the behavior of users. Designing a workable user interface is no small task -- there are hundreds of failed products that at one time or another were going to revolutionize the way we interact with machines. For 3-D gesture systems to avoid the same fate, they'll have to be useful and reliable. That depends not just on technology but also on user psychology.

If a particular gesture doesn't make sense to a user, he or she may not be willing to use the system as a whole. You probably wouldn't want to have to perform the "Hokey Pokey" just to change the channel -- but if you do, it's OK, we don't judge you. Creating a good system means not only perfecting the technology but also predicting how people will want to use it. That's not always easy.

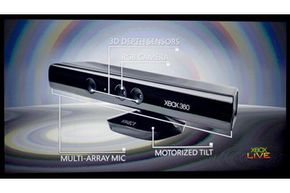

There are a few 3-D gesture systems on the market already. Microsoft's Kinect is probably the system most familiar to the average consumer. It lets you control your Xbox 360 with gestures and voice commands. In 2012, Microsoft announced plans to incorporate Kinect-like functionality into Windows 8 machines. And the hacking community has really embraced the Kinect, manipulating it for projects ranging from 3-D scanning technology to robotics.

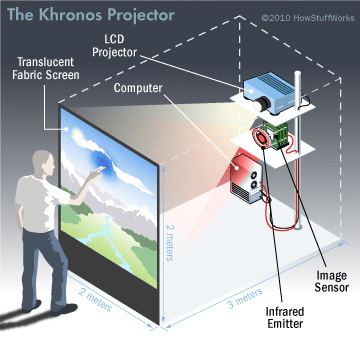

At CES 2012, several companies showcased devices that included 3-D gesture recognition. One company, SoftKinetic, demonstrated a time-of-flight system that remained accurate even when objects were just a few inches away from the camera. A time-of-flight system measures distance by timing how long light takes to travel to an object and bounce back to the sensor; because the speed of light is known, that round-trip time reveals how far away the object is. If companies want to include gesture recognition functions in a computer or tablet, they'll need to rely on systems that can handle gestures made close to the lens.
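To make the time-of-flight idea concrete, here is a minimal sketch of the arithmetic in Python. It assumes the simplest case, in which the sensor directly times a light pulse's round trip; it is not SoftKinetic's method, and the function names are made up for illustration. The light covers the distance twice (out and back), so the distance is the speed of light times the round-trip time, divided by two.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance in meters to an object, given the light's round-trip time.

    Hypothetical helper: assumes the sensor times a pulse directly.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip time in seconds for light to reach an object and return."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A hand about 10 centimeters (roughly four inches) from the lens.
    t = round_trip_for_distance(0.10)
    print(f"Round trip: {t * 1e9:.3f} nanoseconds")
    print(f"Recovered distance: {distance_from_round_trip(t):.3f} meters")
```

For a hand about 10 centimeters from the lens, the round trip takes roughly two-thirds of a nanosecond, which hints at why close-range accuracy is hard to achieve. Many real time-of-flight cameras sidestep direct timing by measuring the phase shift of modulated light instead, a detail this sketch leaves out.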

In the future, we may see tablets with a form of this gesture-recognition software. Imagine propping a tablet up on your desk and placing your hands in front of it. The tablet's camera and sensors detect the location of your hands and map out a virtual keyboard. Then you can just type away on your desktop as if you have an actual keyboard under your fingertips, and the system tracks every finger movement.

The real test for 3-D gesture systems comes with 3-D displays. Adding depth to our displays gives us the opportunity to explore new ways to manipulate data. For example, imagine a 3-D display showing data arranged in the form of stacked boxes extending in three dimensions. With a 3-D gesture display, you could select a specific box even if it weren't at the top of a stack just by reaching toward the camera. These gesture and display systems could create a virtual world that is as immersive as it is flexible.

Will these systems take the place of the tried-and-true interfaces we've grown used to? If they do, it'll probably take a few years. But with the right engineering and research, they could help change the stereotypical image of the stationary computer nerd into an active data wizard.