Computers have been around for more than half a century, and yet the way most people interact with them hasn't changed much. The keyboards we use evolved from typewriters, a technology that dates back almost 150 years. Douglas Carl Engelbart demonstrated a device that we'd later call a computer mouse back in 1968 [source: MIT]. Even the graphical user interface (GUI) has been around for a while: the first one to gain popularity in the consumer market was on the Macintosh in 1984 [source: Utah State University]. Given that computers are far more powerful today than they were 50 years ago, it's surprising that our basic interfaces have changed so little.

Today, we're starting to see more dramatic departures from the keyboard-and-mouse configuration. Smartphones and tablet computers have introduced touchscreens, a technology that has existed for decades, to a much wider audience. We're also building ever-smaller computers, which call for new approaches to user interfaces. You wouldn't want a full-sized keyboard attached to your smartphone; it would defeat the purpose of a device that fits in your pocket.


Touchscreens have also introduced new techniques for navigating a computer. Early touchscreens could detect only a single point of contact: if you touched the display with more than one finger, it couldn't follow your movements. Today, multitouch screens appear in dozens of devices, and engineers have used them to develop gesture navigation, in which predetermined gestures trigger specific commands. For example, several touchscreen devices, including the Apple iPhone, let you zoom in on a photo by placing two fingers on the screen and spreading them apart. Pinching your fingers together zooms back out.
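The pinch gesture boils down to simple geometry. The sketch below is a minimal illustration, not how Apple or any other vendor actually implements it. It assumes the zoom factor is the ratio of the current distance between the two fingers to their distance when the gesture began. The names `pinch_scale` and `apply_zoom` and the zoom limits are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) screen coordinates of one fingertip


def distance(a: Point, b: Point) -> float:
    """Straight-line distance between two touch points."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def pinch_scale(start: Tuple[Point, Point], current: Tuple[Point, Point]) -> float:
    """Zoom factor for a two-finger gesture (hypothetical model).

    Greater than 1 when the fingers spread apart, less than 1 when they
    pinch together, exactly 1 when their spacing is unchanged.
    """
    d0 = distance(*start)
    if d0 == 0:
        raise ValueError("touch points must start at distinct positions")
    return distance(*current) / d0


def apply_zoom(zoom: float, scale: float,
               min_zoom: float = 0.5, max_zoom: float = 8.0) -> float:
    """Multiply the photo's zoom level by the gesture's scale, clamped to assumed limits."""
    return max(min_zoom, min(max_zoom, zoom * scale))


# Fingers start 100 px apart and spread to 200 px: the photo doubles in size.
scale = pinch_scale(((100, 300), (200, 300)), ((50, 300), (250, 300)))
print(apply_zoom(1.0, scale))
```

Measuring the ratio against the gesture's starting distance, rather than frame-to-frame changes, keeps the zoom level tied to where the fingers are now, so small tracking jitter doesn't accumulate.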

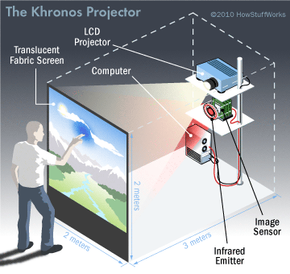

The University of Tokyo's Khronos Projector experiment combines a touch interface with a new way of navigating prerecorded video. The system consists of a projector and a camera mounted behind a flexible screen. The projector displays images on the screen, while the camera detects changes in the screen's tension as a user presses against it. Pushing on one part of the screen alters the video in that region alone, speeding that section up or slowing it down while the rest of the picture remains unaffected.

The Khronos Projector lets you view events in new configurations of space and time. Imagine a video of two people racing side by side down a street. By pressing against the screen, you could manipulate the images so that one runner appears to lead the other. By sweeping your hand across the screen, you could make the two switch places. Events that unfolded one way in the original recording can be made to unfold another way [source: University of Tokyo].
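One simple way to model this effect is to let each pixel of the displayed image come from a different frame of the recording, chosen by how far the screen is pushed in at that spot. The sketch below assumes that model; it is not the Khronos Projector's published algorithm. The normalized depth readings, the `time_warp` function, the `max_offset` parameter, and the choice that pressing harder shows a *later* frame are all hypothetical.

```python
from typing import List

Frame = List[List[int]]  # one grayscale frame: rows of pixel values


def time_warp(video: List[Frame], depth: List[List[float]],
              t: int, max_offset: int) -> Frame:
    """Build one displayed frame in which each pixel may come from a different moment.

    video[k][y][x] is pixel (x, y) of recorded frame k.
    depth[y][x] in [0, 1] is a hypothetical reading of how far the screen is
    pushed in at that pixel. Unpressed pixels (depth 0) show frame t; pressing
    fully (depth 1) shows frame t + max_offset at that spot.
    """
    last = len(video) - 1
    out: Frame = []
    for y, row in enumerate(depth):
        out_row = []
        for x, d in enumerate(row):
            i = t + round(d * max_offset)
            i = max(0, min(last, i))  # never sample outside the recording
            out_row.append(video[i][y][x])
        out.append(out_row)
    return out


# A 10-frame, 2x2-pixel "recording" where every pixel of frame k has value k.
video = [[[k, k], [k, k]] for k in range(10)]
# Press only the bottom-right pixel all the way in while the clip is at frame 3.
print(time_warp(video, [[0.0, 0.0], [0.0, 1.0]], t=3, max_offset=4))
```

Here the pressed pixel shows frame 7 while the rest of the image stays at frame 3, so that part of the scene runs ahead of everything else, much as one runner can be made to pull ahead of the other.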

Interacting with a screen is just the beginning. Next, we'll look at how engineers are developing ways for us to interact with computers without touching anything at all.
