In the 1940s, the University of Pennsylvania built the Electronic Numerical Integrator and Computer, better known as ENIAC. It was one of the earliest electronic, general-purpose computers, and it was a monster. It weighed in at around 30 tons (27.2 metric tons), with half a million hardwired connections and thousands of vacuum tubes forming its circuits [source: Avery].

Skip ahead a few decades to the 1970s and the birth of the personal computer. Years of hard work by computer engineers let us harness the power of a computer at a home desk. Those early personal computers were primitive by today's standards: the earliest could only store information on external disks or magnetic tape.

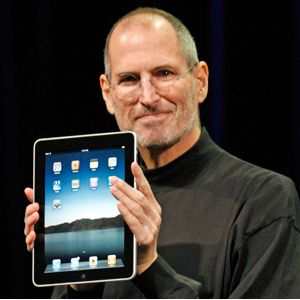

In the 1980s, we saw the first laptop computers hit store shelves. These weren't the sleek, portable computers we're used to lugging around today. They were clunky and heavy, with limited functionality. Over time, these devices became more powerful, yet lighter and less cumbersome.

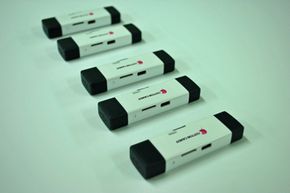

Today, you can carry a smartphone with more computing power than ENIAC. Even desktop PCs have shrunk over the years. While you can still find tower PCs designed for high-end applications, many computers are only slightly larger than a cell phone. And you can even find computers in the form factor of a USB thumb drive.

In this article, we'll take a look at little computers that are a big deal. These devices may be the size of a single circuit board or even smaller. How can engineers pack a full computer onto something so small?