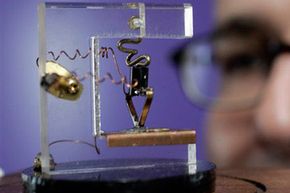

In 1965, Electronics magazine published an article by Dr. Gordon E. Moore, the director of research and development at Fairchild Semiconductor. Moore titled the article "Cramming more components onto integrated circuits." He observed that semiconductor companies like Fairchild could double the number of components on a square inch of silicon every 12 months.

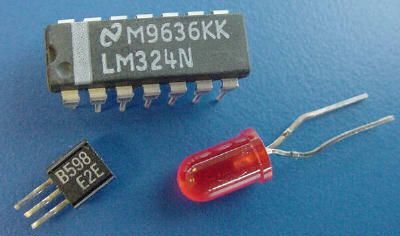

This is a type of exponential growth. A square-inch (6.5 square-centimeter) chip made in 1964 would have half as many components (such as transistors) as a chip manufactured in 1965. Moore expected the trend to hold for at least the next ten years, and it could continue only until chip manufacturers ran into fundamental barriers that blocked their progress.
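To see how quickly yearly doubling adds up, here is a minimal Python sketch. It assumes a fixed 12-month doubling period, as in Moore's original observation; the function name `projected_components` is hypothetical, chosen only for illustration.

```python
def projected_components(start_count: float, years: float,
                         doubling_period_years: float = 1.0) -> float:
    """Project a component count forward (or backward, with negative years),
    assuming it doubles once every `doubling_period_years`."""
    return start_count * 2 ** (years / doubling_period_years)


# With yearly doubling, one year multiplies the count by 2
# and ten years multiply it by 2**10 = 1024.
for years in (1, 2, 5, 10):
    print(years, projected_components(1, years))

# Going back one year halves the count, like the 1964 vs. 1965 comparison.
print(projected_components(64, -1))
```

A negative `years` value runs the projection backward, which is why a chip from one year earlier ends up with half the components.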


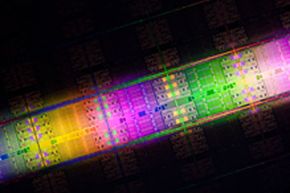

Moore's observation depended on two important factors: technological advances and the economics of mass manufacturing. For his observation to remain valid, we have to keep innovating and finding new ways to fit ever-smaller elements onto a chip. But we also have to make sure the manufacturing process is economically viable, or there will be no way to pay for further development.

Today, we call Moore's observation Moore's Law. Despite the name, it's not really a law. There's no fundamental rule in the universe that guides how powerful a newly made integrated circuit will be at any given time. But Moore's Law has become something of a self-fulfilling prophecy as chip manufacturers have pushed to keep up with the predictions Dr. Moore made way back in 1965. Whether it's out of a sense of pride or simply a desire to lead in the marketplace, companies like Intel have spent billions of dollars in research and development to keep pace.

So, is an observation made back in 1965 still relevant?
