One of the most exciting areas of BCI research is the development of devices that can be controlled by thoughts. Some of the applications of this technology may seem frivolous, such as the ability to control a video game by thought. If you think a remote control is convenient, imagine changing channels with your mind.

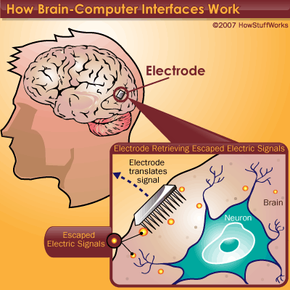

However, there's a bigger picture: devices that would allow severely disabled people to function independently. For people with a spinal cord injury, something as basic as controlling a computer cursor via mental commands would represent a revolutionary improvement in quality of life. But how do we turn those tiny voltage measurements into the movement of a robotic arm?

Early research used monkeys with implanted electrodes. The monkeys used a joystick to control a robotic arm while scientists measured the signals coming from the electrodes. Eventually, the researchers changed the controls so that the robotic arm was being controlled only by the signals coming from the electrodes, not the joystick.

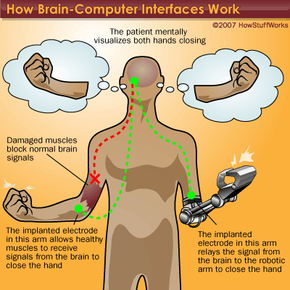

A more difficult task is interpreting the brain signals for movement in someone who can't physically move their own arm. With a task like that, the subject must "train" to use the device. With EEG electrodes or an implant in place, the subject would visualize closing his or her right hand. After many trials, the software can learn the signals associated with the thought of hand-closing. Software connected to a robotic hand is programmed to receive the "close hand" signal and interpret it to mean that the robotic hand should close. At that point, when the subject thinks about closing the hand, the software recognizes the signal pattern and the robotic hand closes.
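
The training process described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the "brain signals" are made-up three-number feature vectors, the learning method is a simple nearest-centroid classifier chosen for readability (real systems may use quite different techniques), and every name here (`simulate_trial`, `train`, `classify`, `robotic_hand_command`) is hypothetical.

```python
import random

def mean_vector(trials):
    """Average a list of equal-length feature vectors, column by column."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]

def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train(labeled_trials):
    """Learn one average signal pattern (centroid) per mental state."""
    return {label: mean_vector(trials) for label, trials in labeled_trials.items()}

def classify(centroids, features):
    """Return the label whose learned pattern is closest to the new signal."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))

def simulate_trial(rng, center, noise=0.5):
    """Stand-in for one recorded trial: a noisy copy of an invented pattern."""
    return [rng.gauss(c, noise) for c in center]

# Invented signal patterns; real recordings would replace these.
REST = [1.0, 1.0, 1.0]
CLOSE_HAND = [3.0, 0.5, 2.0]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
training_data = {
    "rest": [simulate_trial(rng, REST) for _ in range(50)],
    "close_hand": [simulate_trial(rng, CLOSE_HAND) for _ in range(50)],
}
centroids = train(training_data)

def robotic_hand_command(features):
    """Translate a decoded mental state into a command for the robotic hand."""
    return "CLOSE" if classify(centroids, features) == "close_hand" else "HOLD"
```

The "many trials" matter because each individual recording is noisy; averaging over them is what lets the software settle on a stable pattern for the thought of hand-closing.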

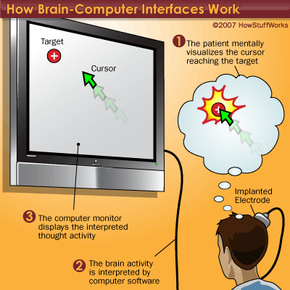

A similar method is used to manipulate a computer cursor, with the subject thinking about forward, left, right and back movements of the cursor. With enough practice, users can gain enough control over a cursor to draw a circle, access computer programs and control a TV [source: Ars Technica]. The same approach could theoretically be expanded to allow users to "type" with their thoughts.
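
Once the software can tell the four imagined directions apart, moving the cursor is the easy part. The sketch below assumes the decoder already outputs one of the labels "forward", "back", "left" or "right", and that "forward" means up on the screen. The function name, step size and screen dimensions are all hypothetical choices for illustration.

```python
# Screen coordinates: x grows to the right, y grows downward.
MOVES = {
    "forward": (0, -1),  # assumed to mean "up" on the screen
    "back": (0, 1),
    "left": (-1, 0),
    "right": (1, 0),
}

def move_cursor(position, commands, step=10, bounds=(800, 600)):
    """Apply a sequence of decoded direction labels to a cursor position."""
    x, y = position
    width, height = bounds
    for command in commands:
        dx, dy = MOVES.get(command, (0, 0))  # unrecognized labels are ignored
        # Clamp so the cursor never leaves the screen.
        x = min(max(x + dx * step, 0), width - 1)
        y = min(max(y + dy * step, 0), height - 1)
    return (x, y)

new_position = move_cursor((400, 300), ["forward", "forward", "right"])
```

Ignoring unrecognized labels is a deliberate choice in this sketch: when the decoder is unsure, leaving the cursor where it is seems safer than guessing a direction.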

Once the basic mechanism of converting thoughts to computerized or robotic action is perfected, the potential uses for the technology are almost limitless. Instead of a robotic hand, disabled users could have robotic braces attached to their own limbs, allowing them to move and directly interact with the environment. This could even be accomplished without the "robotic" part of the device. Signals could be sent to the appropriate motor control nerves in the hands, bypassing a damaged section of the spinal cord and allowing actual movement of the subject's own hands.

On the next page we'll learn about cochlear implants and artificial eye development.