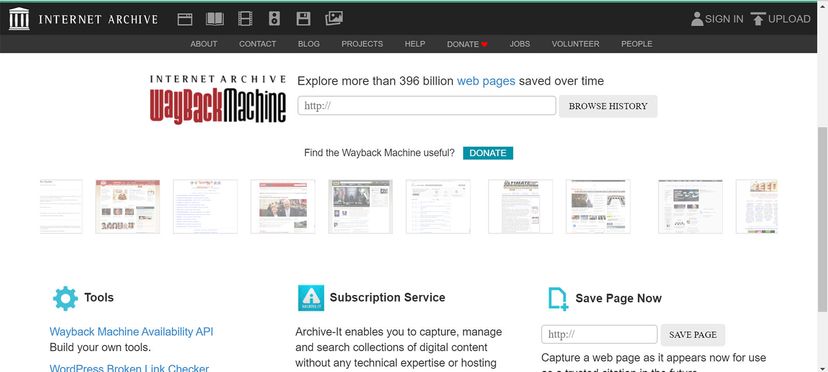

The Wayback Machine is the brainchild of Brewster Kahle and Bruce Gilliat, who also founded the Internet Archive, a digital library of websites, books, audio and video recordings and software programs. Both projects are San Francisco-based nonprofits. The Wayback Machine is a project of the Internet Archive. (Kahle and Gilliat also created Alexa Internet which analyzes web traffic patterns and was sold to Amazon.)

"They [Kahle and Gilliat] had started to archive webpages in 1996, and in 2001 launched the Wayback Machine to support discovery and playback of those archived web resources," says Graham in a recent email interview. "And, yes, the name was inspired by the 1960s cartoon series 'The Rocky and Bullwinkle Show.' In the cartoon the WABAC Machine (note the spelling difference) was a plot device used to transport the characters Mr. Peabody and Sherman back in time to visit important events in human history."

In a world where there are more than 1.7 billion websites, with the number climbing dramatically by the day, how can anyone possibly hope to catalog so many webpages? The Wayback Machine uses what are called "crawlers," a type of software that automatically moves through the web, taking snapshots of billions of sites as it goes. Some of the process is automated, but many of the requests are generated manually by a network of librarians, who prioritize certain types of sites that they think are important to preserve for posterity and for future generations.

The crawlers don't capture every iteration of sites. The frequency of snapshots differs by the site's importance – very significant sites might be recorded every few hours. Others might be logged weeks or months apart. Most aren't logged at all (so don't worry, that embarrassing fan website you made in high school is probably long gone by now). Wayback Machine aims to capture snapshots of important content, say, the breaking news headlines created by major media companies.

Furthermore, it doesn't necessarily recreate the entire site, and it doesn't preserve the data in a way that you'd experience it with your browser. It may only capture a few images of a few pages, and not preserve content that's linked to other sites outside the domain.